Passwords remain the principal security mechanism for protecting information assets. To make passwords harder to guess, security policies usually specify baseline requirements concerning password length (say at least 6 characters), composition rules (say all alphanumeric characters with at least one digit) and further content guidelines on avoiding dictionary words and personal references. The intent of such policies is to gain some assurance that users will not select passwords, either willingly or unintentionally, from a potentially small fraction of all possible passwords.

An interesting approach to specifying password policies is taken by NIST in the Electronic Authentication Guide (SP800-63), a document which has been evolving since early 2006. In SP800-63 entropy-based arguments are used to create password policies that provide a bound on the maximum number of password guesses that an attacker can make while still having only a small probability of success. Entropy is a classical measure of information content of an event with an uncertain outcome. In SP800-63 the approach is to specify policies which yield passwords with a minimum amount of entropy, and then to translate this uncertainty measure into a minimum amount of work (that is, guesses) that must be performed by an attacker to recover the password.

The SP800-63 Approach to Password Policies

The main threat addressed by SP800-63 is online password guessing, assuming that the attacker has online access to the target system or (web) application for the lifetime of the password (typically several months at least). This focus seems to hark back to the 1985 US DoD Password Management Guidelines which considered resistance to online guessing as the fundamental metric of password security.

The SP800-63 approach to selecting passwords can be outlined in the following 5 steps.

1. Set a threshold of success for an online attack to 2^{-k}.
2. Model the textual properties of user-selected passwords as English text.
3. Use known results on entropy estimates for English to select password policies that yield a minimum amount of entropy in user passwords.
4. Derive a bound M on the number of online guesses for an attacker to recover a password generated according to the policies in Step 3 and the threshold in Step 1.
5. Verify that the attacker will be limited to a number of online guesses m such that m < M.

In Step 1 a threshold is set for the success of online guessing attacks which is acceptable to the system owner. As suggested by SP800-63 this value should be at least k = 10 (about 1 in 1000) and preferably k = 14 (about 1 in 16,000). These probabilities are taken over the number of possible guesses that the attacker can make over the lifetime of the password. If these odds are still too favorable to the attacker then the system designer should use a stronger authentication mechanism.

In Step 2 it is assumed that user-selected passwords have the same characteristics as English text. This is a somewhat conservative assumption (even a reasonable one, some might say) and it permits previously known results on the entropy of English to be applied. The basic results here are due to the experiments of Claude Shannon, the inventor of information entropy and the source of many other significant discoveries. Based on these results, password policies are selected to ensure that user passwords will contain a minimum amount of entropy.

In Step 4 the entropy implied by the password policies is converted into work (the number M of online guesses needed to recover a password) according to the threshold stated in Step 1. Finally in Step 5 the system is designed to ensure that the number of possible online guesses m over the lifetime of the password is less than M.

To clarify this approach, we recount an example from Appendix 1 of SP800-63. Consider a password policy that mandates passwords of length 8, selected from the 94 printable keyboard characters, that must include at least one lower case letter, one upper case letter, one digit and one special character, and that are finally scanned to remove dictionary words. SP800-63 asserts that such passwords contain at least 30 bits of entropy, and concludes that if the number of online guesses mounted by an attacker is less than 2^{16}, then the likelihood that the password will be guessed is less than 2^{-14}.

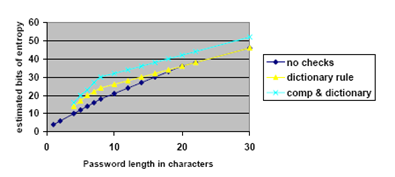

In this case passwords will be considered secure against online guessing if the attacker can be limited to fewer than about 65,000 guesses (2^{16} = 65,536) over the lifetime of the password. The accompanying graph and its associated table in Appendix 1 of SP800-63 show measures of entropy for passwords up to length 30.

Such arguments may end up being influential with security policy makers, as exemplified by "How Strong is Your Password? NIST has some formulas". For example, the US Railroad Retirement Board includes the following statement in its authentication policies (level 2 corresponds to a threshold of k = 14):

For level 2 protection against on-line guessing, NIST recommends guessing entropy of 30. Guessing entropy is an indication of the amount of work to determine, or guess, a password. Alternately, NIST indicates that any system that required passwords to be changed at least every two years and limited trials by locking an account for 24 hours after six failed attempts would satisfy the targeted guessing attack requirements for level 2.
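The arithmetic behind the quoted lockout policy can be checked with a short sketch. It assumes a 365-day year and an attacker who uses every available window; the function name `lifetime_guesses` and its parameters are illustrative and do not come from SP800-63.

```python
def lifetime_guesses(attempts_per_lockout: int, lockout_hours: float,
                     lifetime_days: float) -> int:
    """Upper bound on online guesses when every batch of failed
    attempts is followed by one lockout period."""
    windows = int(lifetime_days * 24 // lockout_hours)
    return attempts_per_lockout * windows

# Six failed attempts per 24-hour lockout, password changed every two years.
m = lifetime_guesses(attempts_per_lockout=6, lockout_hours=24,
                     lifetime_days=2 * 365)
M = 2 ** 16  # guess bound quoted earlier for 30 bits of entropy and k = 14

print(m)      # 4380
print(m < M)  # True
```

Under these assumptions the attacker gets roughly 4,400 guesses, comfortably below the 2^{16} bound, which is why the lockout rule is treated as sufficient for level 2.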

Converting Entropy into Guessing Work

This seems like magic – pump up the entropy in passwords and you get resistance to online guessing attacks. To understand what is happening here let’s make the following definitions. We will assume that there are N possible passwords defined by a policy

P_1, P_2, \dots, P_N

and that a given user U will select these passwords according to the probability distribution

p_1, p_2, \dots, p_N, where p_1 + p_2 + \dots + p_N = 1

Lastly let’s assume for simplicity that the passwords are ordered such that

p_1 \ge p_2 \ge \dots \ge p_N

which just means that password P_1 is the most likely choice of the user with probability p_1, password P_2 is the next most likely choice with probability p_2, and so on. The SP800-63 approach is to find the largest value of M such that

p_1 + p_2 + \dots + p_M \le 2^{-k}

and to ensure that an attacker cannot make M online guesses over the lifetime of the password. Here we make the conservative assumption that an attacker is searching the passwords in the same order of likelihood as the user will select them.
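When the distribution is known explicitly, the bound M can be computed directly by accumulating probabilities in descending order. The following is a minimal sketch; the function `max_guesses` and the toy distribution are hypothetical illustrations, not taken from SP800-63, and exact fractions are used to avoid rounding at the threshold.

```python
from fractions import Fraction
from itertools import accumulate

def max_guesses(probs, k):
    """Largest M such that the M most likely passwords have total
    probability at most 2^-k (zero if even the top one exceeds it)."""
    threshold = Fraction(1, 2 ** k)
    ordered = sorted(probs, reverse=True)  # attacker tries likeliest first
    m = 0
    for cumulative in accumulate(ordered):
        if cumulative > threshold:
            break
        m += 1
    return m

# Hypothetical toy policy with N = 11 passwords (probabilities sum to 1).
toy = [Fraction(1, 4), Fraction(1, 8), Fraction(1, 8)] + [Fraction(1, 16)] * 8

print(max_guesses(toy, 1))  # 3
print(max_guesses(toy, 2))  # 1
print(max_guesses(toy, 3))  # 0
```

Note that M can be zero: if the single most popular password already has probability above 2^{-k}, no number of guesses is acceptable.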

The big trick in the SP800-63 analysis is to derive the value of M from the entropy in the passwords rather than finding the password distribution explicitly. Using standard definitions, the password entropy is given as

H = -\sum_{i=1}^{N} p_i \log_2 p_i

Now this does not seem to help us much. Say for example that the password entropy is determined to be 30 bits, what can we then say about the number of acceptable password guesses M? Well what SP800-63 does is to assume that a password with 30 bits of entropy represents the same difficulty to guess as a random 30-bit number. What this assumption means is that

p_i = 2^{-H} for each of 2^{H} equally likely passwords (here p_i = 2^{-30})

and M can be evaluated as

M \cdot 2^{-H} \le 2^{-k}, so that M = 2^{H - k}

So when the entropy is 30 bits and k = 14, then if the number of online guesses mounted by an attacker is less than M = 2^{16} = 2^{30 - 14}, the likelihood that the password will be guessed is less than 2^{-14}.
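The conversion from entropy to guesses is simple enough to sketch in a few lines. This assumes, as SP800-63 does, that all 2^H passwords are equally likely; the function name `uniform_guess_bound` is illustrative.

```python
from fractions import Fraction

def uniform_guess_bound(entropy_bits: int, k: int) -> int:
    """M = 2^(H - k), assuming all 2^H passwords are equally likely."""
    if entropy_bits < k:
        return 0  # even one guess would exceed the 2^-k threshold
    return 2 ** (entropy_bits - k)

M = uniform_guess_bound(30, 14)
# M guesses, each succeeding with probability 2^-30.
success = Fraction(M, 2 ** 30)

print(M)                                # 65536
print(success == Fraction(1, 2 ** 14))  # True
```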

The Flaw of Averages

Unfortunately there is a flaw in this argument. Imagine that you have a random number generator for AES-128 keys, and that you determined that the entropy of the keys produced by the generator was only 80 bits rather than the expected 128 bits. What could you say about the probabilities of the keys being produced by such a generator?

Well it could be the case that there is a subset of 2^{80} keys that are produced uniformly (each with probability 2^{-80} ) and that the remaining keys (the vast majority) are produced with probability zero. Such a distribution would produce an entropy of 80 bits.

So what SP800-63 does in its analysis is to use the same logic but in reverse. If the set of user passwords has an entropy of 30 bits then SP800-63 concludes that there are effectively 2^{30} uniformly distributed passwords, each selected by users with probability of 2^{-30}. Trying to guess the correct password is then like trying to guess a random 30-bit number.

But having a uniform subset of 2^{30} passwords is only one possible distribution that could produce an entropy of 30 bits. It is entirely possible that there could be passwords which have a much higher probability than 2^{-30} of being selected (and others with a much lower probability) while still maintaining an overall entropy of 30 bits. And this would lead to a smaller value of M than is implied by the analysis above.

The problem here is that the entropy is a single summary statistic (an average in fact) computed over the password distribution, and as such, detail on the distribution itself is lost. The logic of the SP800-63 analysis is to hypothesize into existence a uniform distribution as the source of the entropy value, when in fact other non-uniform distributions may well have been responsible. And these other non-uniform distributions may provide less security against online guessing than is implied by the hypothesized uniform distribution.
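To make the point concrete, here is a sketch of one hypothetical non-uniform distribution with exactly 30 bits of entropy: a single popular password chosen 1% of the time, with the remaining probability spread evenly over a large set of other passwords. The two-level shape and the 1% figure are assumptions chosen for illustration, not measurements of real user behavior.

```python
import math

def two_level_entropy(p_top: float, n_rest: float) -> float:
    """Entropy in bits when one password has probability p_top and the
    remaining mass 1 - p_top is spread uniformly over n_rest passwords."""
    rest = 1.0 - p_top
    return -p_top * math.log2(p_top) - rest * math.log2(rest / n_rest)

def rest_size_for_entropy(p_top: float, target_bits: float) -> float:
    """Solve two_level_entropy(p_top, n) == target_bits for n."""
    rest = 1.0 - p_top
    binary_entropy = -p_top * math.log2(p_top) - rest * math.log2(rest)
    return 2.0 ** ((target_bits - binary_entropy) / rest)

p_top = 0.01                                # assumed popular password
n_rest = rest_size_for_entropy(p_top, 30)   # size of the uniform tail

print(round(two_level_entropy(p_top, n_rest), 6))  # 30.0
print(p_top > 2 ** -14)                            # True
```

The entropy is 30 bits, so the uniform model would permit 2^{16} guesses at k = 14. Yet the attacker's very first guess succeeds with probability 0.01, far above 2^{-14}, so the true value of M for this distribution is zero.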

In fact it has been observed before by several authors that entropy is not a good indicator of work, which in our case means the number of guesses to recover a password. To understand this statement further you will need to take the time to read some research. The best papers on the topic are written by John O. Pliam, starting with "On the Incomparability of Entropy and Marginal Guesswork in Brute Force Attacks". Eric Verheul has done some more recent research, presented at RSA 2007.

The Gordian Knot Remains

Setting effective password policies really depends on having good knowledge of how users choose passwords, in particular, the form of the probability distribution for their password selection.

In short no one seems to know how to unravel the Gordian Knot surrounding user password habits. SP800-63 attempted to get at these probabilities via entropy arguments, but in the entropy we have already lost too much information concerning the password distribution. Even so, the SP800-63 password policy recommendations are good – just take their online guessing bounds with a potentially large grain of salt. Appendix 1 of SP800-63 makes very interesting reading nonetheless.
