- Threat Classification, Web Application Security Consortium

- Statistical-based approach to password guessing

- Clobbering the Cloud

- NIST Statistical Test Suite

- 2009 Key Management Report from True Catalyst

- iPhone Privacy presentation from Nicolas Seriot

Thursday, December 17, 2009

Recent Uploads to Scribd, Dec 17

Sunday, December 13, 2009

A USB Entropy Drive

A UK company called Simtec Electronics has created a USB thumb drive device with dedicated hardware for producing a continuous stream of high entropy bits, suitable for mixing into an existing entropy pool on your device or feeding directly into applications and protocols that require sources of randomness. The product is called Entropy Key and can be ordered from the website for £36.00, with further discounts for bulk orders.

An overview of the process for producing the entropy is given in the diagram below. There are two independent noise generators based on P-N junctions that are sampled at a high rate to produce a stream of bytes. The output of each generator and their XOR are subjected to the universal statistical bit test devised by Ueli Maurer. If the sequences pass this test then von Neumann’s debias trick is applied, and then another round of universal testing, followed by hashing with Skein. This process blocks at any stage if the computed statistics fall outside conservative estimates of the properties of random generators.
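As an illustration of the debiasing step mentioned above, here is a minimal Python sketch of von Neumann's trick. It is not Simtec's code; the function name and the list-of-bits representation are my own assumptions. Bits are read in non-overlapping pairs, equal pairs are discarded, and the first bit of each unequal pair is kept.

```python
def von_neumann_debias(bits):
    """Return debiased bits from a sequence of 0/1 values.

    Non-overlapping pairs are examined: (0, 1) emits 0, (1, 0) emits 1,
    and the pairs (0, 0) and (1, 1) are discarded.
    """
    output = []
    # Step through the input two bits at a time; a trailing odd bit is ignored.
    for i in range(0, len(bits) - 1, 2):
        first, second = bits[i], bits[i + 1]
        if first != second:
            output.append(first)
    return output


if __name__ == "__main__":
    sample = [0, 1, 1, 0, 1, 1, 0, 0, 1, 0]
    print(von_neumann_debias(sample))
```

The output is unbiased only if successive input bits are independent, and at least half of the input is always thrown away, which is one reason the raw sampling rate has to be much higher than the delivered rate.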

These steps are repeated until 20,000 bits have been collected, upon which the statistical tests recommended by FIPS 140-2 are applied. The 20,000 bit pool is then parcelled into blocks of 256 bits, and Simtec estimates that each such block has been generated from about 5,000 bits of noisy hardware samples.
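The simplest of the FIPS 140-2 checks on a 20,000 bit sample is the monobit test, which just counts the ones. The sketch below is my own illustration rather than the Entropy Key implementation, and the default bounds (more than 9,725 and fewer than 10,275 ones) are an assumption based on my recollection of the original FIPS 140-2 text, so they are left as parameters.

```python
def monobit_test(bits, low=9725, high=10275):
    """Return True if the number of ones lies strictly between low and high.

    The sample must be exactly 20,000 bits. The default bounds are an
    assumption recalled from the original FIPS 140-2 text and should be
    checked against the standard before being relied upon.
    """
    if len(bits) != 20000:
        raise ValueError("the monobit test is defined on 20,000 bits")
    ones = sum(bits)
    return low < ones < high


if __name__ == "__main__":
    alternating = [i % 2 for i in range(20000)]   # exactly 10,000 ones
    stuck_at_zero = [0] * 20000                   # a failed generator
    print(monobit_test(alternating), monobit_test(stuck_at_zero))
```

Note that the alternating sequence passes even though it is perfectly predictable, which is why the monobit count is only one of several tests applied to the pool.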

There is quite a reliance on Maurer’s universal statistical bit test, and perhaps justifiably so since this test is specifically designed to detect deviations from expected statistical properties in a bit generator by computing an estimate of the generator’s entropy using ideas from universal data compression algorithms. The test is quite simple, and there is a reasonable description and parameterization given in NIST SP 800-22, which also contains a description of a large number of other statistical tests. A research paper on a finer analysis of Maurer’s test can be found here.
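To give a feel for how simple Maurer's test is, here is a minimal Python sketch of the test statistic only, without the final pass/fail decision. The block length L = 6 and the Q = 640 initialization blocks are assumptions on my part, taken from the smallest parameter set I recall from SP 800-22, for which the statistic on random data should be close to 5.22. The function name is hypothetical and this is not the Entropy Key code.

```python
import math


def maurer_statistic(bits, block_len=6, init_blocks=640):
    """Average log2 distance between repeats of each block_len-bit pattern.

    The first init_blocks blocks only fill the table of last-seen
    positions; the remaining blocks contribute to the statistic.
    """
    total_blocks = len(bits) // block_len
    test_blocks = total_blocks - init_blocks
    if test_blocks <= 0:
        raise ValueError("not enough data for the chosen parameters")

    def block_value(index):
        # Blocks are numbered from 1; pack the block's bits into an integer.
        value = 0
        for bit in bits[(index - 1) * block_len: index * block_len]:
            value = (value << 1) | bit
        return value

    last_seen = [0] * (1 << block_len)
    for i in range(1, init_blocks + 1):
        last_seen[block_value(i)] = i

    total = 0.0
    for i in range(init_blocks + 1, total_blocks + 1):
        value = block_value(i)
        total += math.log2(i - last_seen[value])
        last_seen[value] = i
    return total / test_blocks


if __name__ == "__main__":
    import random
    rng = random.Random(1)
    sample = [rng.getrandbits(1) for _ in range(6 * (640 + 5000))]
    print(maurer_statistic(sample))
```

A generator that is stuck or highly repetitive produces short distances between repeated patterns and therefore a statistic well below the expected value, which is the kind of deviation the Entropy Key is described as blocking on.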

The output rate is, by its nature, variable but an average rate of more than 30 kilobits per second is expected. The complete client daemon source is provided under an MIT license which means everyone is free to examine the code for themselves. Simtec also notes that the Entropy Key can automatically detect various physical attacks, such as temperature changes (by using a built-in temperature sensor), and opening of the case. The device is currently undergoing testing with “select customers” but is available for general ordering. There is an IRC channel #ekey on the oftc network if you want to discuss any of this further.

(Thanks to Vincent Sanders for providing some more technical details)

Saturday, December 12, 2009

A Faraday Wallet

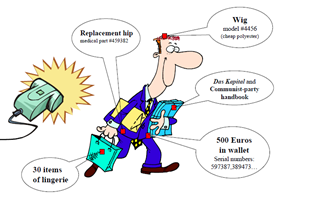

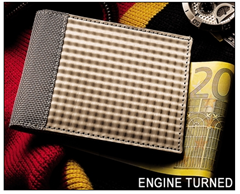

Do you remember the blocker tag? This was a proposal from researchers Ari Juels, Ron Rivest, and Michael Szydlo, all affiliated with RSA Data Security, published (and surely patented) in 2003. It was about this time that the privacy concerns over information that could be collected about you and your habits by engaging in passive exchanges with arbitrary RFID readers were reaching their zenith. Your RFID tagged items could be passively giving away a lot of information without your consent or knowledge.

Juels, Rivest, and Szydlo jokingly suggested that you could encase yourself in protective foil or mesh, which would make walking a bit more awkward but perhaps could be useful for wallets. And now we have just such a wallet, shown below.

It is advertised as an Identity Theft Preventing Privacy Wallet, retailing for $79.95. The product advertising states that

Most credit cards now contain tiny chips that provide a unique identifier that can be scanned to retrieve your billing details.

Such RFID technology can, unfortunately, also be corrupted by hackers using portable scanners in crowded airports, restaurants, and stadiums. Our slim and surprisingly lightweight Privacy Wallets are literally woven from over 20,000 super-fine strands of stainless steel into a flexible fabric that feels like silk — but protects your ID like armor plate! Stronger than leather, with no sharp edges or corners — and won’t stain or scratch.

Inside, 6 credit card slots, 2 internal slots, and a billfold let you carry plenty of purchasing power — completely shielded from today’s high-tech pickpockets.

This could go well with your swine flu suit.

Thursday, December 10, 2009

The Crypto Year in Review from Bart Preneel

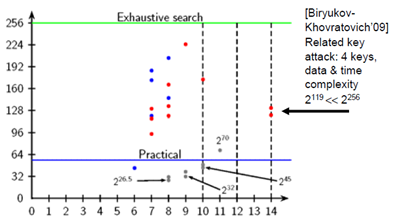

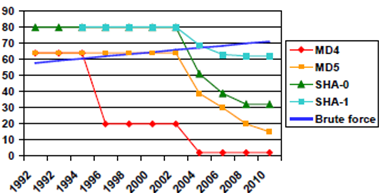

Preneel then considers hash functions, the topic of his PhD thesis. He gives a benchmark slide showing the complexity of collision attacks against well-known hash functions, assuming a $100K USD funded adversary using special hardware (equivalent to 4 million PCs).

The downward trend of the graphs is suggestive of a meltdown for hash functions, with the worst implications for protocols and signatures yet to play out. The transition path to a better hashing standard via NIST's SHA-3 hash contest is underway, and a new standard will be selected by 2012. However, Preneel is concerned that the design of SHA-3 will be based on the state of the art from 2008 – that is, all of the additional insights and learnings produced in the evaluation of the SHA-3 candidates will not be exploited until SHA-4.

Monday, December 7, 2009

WPA Password Cracking in the Cloud for $34

Following on from the recent results of a project on the feasibility of password cracking using cloud computing, a new cloud service for cracking WPA passwords has been announced by researcher Moxie Marlinspike, best known for his work on discovering subtle flaws in the SSL protocol. The cloud service attempts to crack uploaded passwords against a 135 million word WPA-optimized dictionary on a 400 CPU cluster.

The stated purpose of the service is to assist penetration testers and network auditors who need to test the strength of WPA passwords that use pre-shared keys (PSK or personal mode). In this mode, the PSK master key is used to derive a session key from several parameters in the initial wireless connection handshake, including the MAC addresses of the client and base station. In practice, the PSK is a password and is therefore exposed to a brute force or dictionary attack.

Verifying a guess for the PSK password is relatively costly, roughly the equivalent of processing a megabyte of data, since the session key is derived from 4096 iterated hash operations. This high iteration count, or spin, limits the number of password trials to several hundred per second on a standard desktop. Marlinspike's cloud service searches through a 135 million word dictionary in just 20 minutes, a computation that would otherwise take 5 days or so on a standard desktop.
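The cost of a single guess can be sketched in a few lines of Python. In WPA personal mode the 256-bit key is derived from the passphrase with PBKDF2 using HMAC-SHA1, the network name as salt, and 4096 iterations, which the standard library exposes as `hashlib.pbkdf2_hmac`. The passphrase, network name and the rate of 300 guesses per second below are hypothetical values chosen only to reproduce the rough arithmetic in the text.

```python
import hashlib


def wpa_psk(passphrase, ssid):
    """Derive the 32-byte WPA pre-shared key for a passphrase and network name."""
    return hashlib.pbkdf2_hmac(
        "sha1", passphrase.encode("utf-8"), ssid.encode("utf-8"), 4096, 32
    )


def search_days(dictionary_size, guesses_per_second):
    """Days needed to try every word in a dictionary at a given guess rate."""
    return dictionary_size / guesses_per_second / 86400


if __name__ == "__main__":
    # Hypothetical passphrase and network name, for illustration only.
    print(wpa_psk("correct horse", "HomeNetwork").hex())
    # Assumed desktop rate of 300 guesses per second ("several hundred").
    print(round(search_days(135_000_000, 300), 1), "days on one desktop")
    # Rate implied by finishing the same dictionary in 20 minutes.
    print(135_000_000 / (20 * 60), "guesses per second in the cloud")
```

At 300 guesses per second the 135 million word dictionary takes a little over 5 days, and finishing it in 20 minutes requires 112,500 guesses per second, or roughly 280 per CPU across 400 CPUs, so the quoted figures hang together.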

And all this for just $34, after a client has uploaded a capture of the WPA handshake on the network of interest. If the cloud service does not find a password then a client can be confident that the submitted password is resistant to dictionary attacks – no refund, you still pay for the assurance!

The Church Of Wifi has a project to create public rainbow tables for WPA based on common passwords, but the resulting tables only encode one million passwords because the tables must be recomputed for each network identifier (ESSID), which acts as salt in the password derivation function. Marlinspike opted to create his own WPA-optimized dictionary list which includes word combinations, phrases, numbers, symbols, and “elite speak”.
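The effect of the network identifier as salt is easy to demonstrate. The sketch below precomputes a small lookup table for one network name and shows that the same passwords yield entirely different keys under another name, so the table cannot be reused. The word list and network names are hypothetical, and the derivation is the standard PBKDF2 with HMAC-SHA1 and 4096 iterations rather than anything specific to the Church Of Wifi tables.

```python
import hashlib


def derive_key(passphrase, ssid):
    """PBKDF2-HMAC-SHA1 with the network name as salt, 4096 iterations, 32 bytes."""
    return hashlib.pbkdf2_hmac(
        "sha1", passphrase.encode("utf-8"), ssid.encode("utf-8"), 4096, 32
    )


def build_table(words, ssid):
    """Precompute a key -> passphrase lookup table for a single network name."""
    return {derive_key(word, ssid): word for word in words}


if __name__ == "__main__":
    words = ["password", "letmein1", "qwertyuiop"]   # hypothetical dictionary
    table = build_table(words, "linksys")            # hypothetical network name
    # The table resolves keys from the network it was built for...
    print(table.get(derive_key("letmein1", "linksys")))
    # ...but is useless against the same password on another network.
    print(table.get(derive_key("letmein1", "netgear")))
```

This is why precomputed tables tend to be built only for the most common default network names, and why a dictionary attack run against the captured handshake for a specific network remains attractive.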

In Security Muggles I posted about finding ways to connect with management about security. Robert Graham, CEO of penetration testing company Errata Security, has commented, "When I show this to management and say it would cost $34 to crack your WPA password, it's something they can understand. That helps me a lot."

PC World has somewhat dramatically reported the announcement as New Cloud-based Service Steals Wi-Fi Passwords, suggesting that the cloud is reaching into wireless networks and grabbing passwords. In fact, the service is designed to trade off a 5-day desktop computation against a 20-minute cloud computation for $34. Nonetheless, a better name would be WPA Auditor rather than WPA Cracker.

Sunday, December 6, 2009

A Threat Model for SSL

I traced a link to my post How fast are Debian-flawed certificates being re-issued? to SSL Shopper, a new site for me, and I looked back through their news items and found a link to an SSL Threat model proposed by Ivan Ristić, the principal author of ModSecurity and a leader in Apache security.

Referring to the origins of his threat model, Ivan states that

SSL is easy to use but also very easy to use incorrectly. The ecosystem, which is built of the specifications, the implementations, the CAs and the PKI, is full of traps, each of which is very easy to fall into. Once I started to spend significant time thinking about SSL I set out to build a model of the ecosystem, for my own education and to ensure that I understand it all. That's how I arrived to the SSL Threat Model.

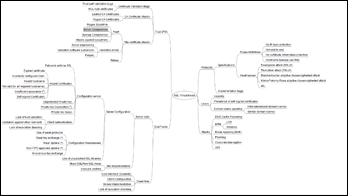

His threat model is represented as a FreeMind map, available as a graphic as shown below. The threat model considers clients, servers, PKI, protocols, users and attacks, and perhaps the model needs to be updated in light of the new TLS Renegotiation Attack.

Ivan admits that the model needs some additional clarification, but it is probably more useful as a published draft rather than waiting for him to find the time to perfect the model (the same reasoning led me to release my outline of a password book).

Tidbits: A5/1, WhiteListing and Google

There is a nice write-up in the very respectable IEEE Spectrum on the A5/1 rainbow table generation project run by Karsten Nohl for cracking GSM encryption. The write-up for Nohl in the IEEE is a signal that his project has mainstream acceptance and attention. The timeliness of Nohl’s project was heightened a few weeks ago when MasterCard announced that they would be using GSM as an additional authentication factor in their transactions. This MasterCard feature may end up being very popular since IDC recently predicted that the mobile phone market will exceed one billion handsets by the end of 2010. Hopefully this huge demand will increase the deployment of the stronger A5/3 algorithm that is being phased in during upgrades to 3G networks.

The evidence continues to mount that whitelisting is an idea whose time has come. Roger Grimes at InfoWorld published a large list of reviews on whitelisting products, and he was pleasantly surprised that “whitelisting solutions are proving to be mature, capable, and manageable enough to provide significant protection while still giving trustworthy users room to breathe”. Given that the amount of malware introduced in 2008 exceeded all known malware from previous years, viable alternatives to the current blacklisting model are needed.

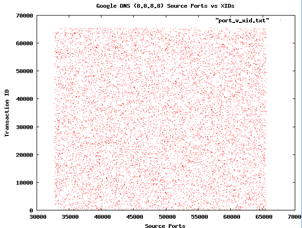

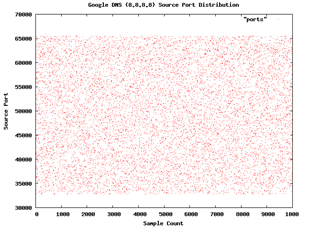

Finally, Google recently announced that it will be offering its own DNS service, nominally to increase performance and security. Sean Michael Kerner wonders whether Google DNS is resistant to the attacks reported by Dan Kaminsky last year, where common DNS implementations relied on poor random numbers. H. D. Moore has forwarded Kerner some graphs he made from sampling Google DNS for randomness. First, a plot on port scanning

and then a plot of source ports against transaction IDs

In both cases the graphs look quite random, and so both Kerner and Moore conclude that Google seems to be on the right track here. But Moore concedes that more testing needs to be done.

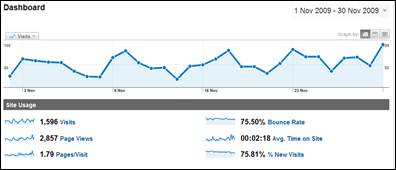

November Blog Round-Up

I normally don’t post monthly blog summaries, but last month there seemed to be quite a bit to write about. It was a good month for visits and page views, so thank you to everyone. Here is the No Tricks dashboard from Google Analytics (click to enlarge).

And a review of the posts

Opinion and Analysis

- The Other Google Desktop

- How fast are Debian-flawed certificates being re-i...

- The TLS Renegotiation Attack for the Impatient

- More on TMTO and Rainbow Tables

- The Internet Repetition Code

- Security FreeMind map sources

- Not so sunny for Whit Diffie

- Quadratic Football Revisited

- FUDgeddaboudit

- MasterCard bets on A5/1

- TLS Renegotiation Attack Whitepaper

- How big is 2^{128}?

Visualisations

- Visualisations of Data Loss

- Navigation map of the Cloud Ecosystem

- Growth of Wal-mart across America

- Death Star Threat Modeling

- mini-Bruce for $89

News

Improving Blog Navigation

Wednesday, December 2, 2009

Google Maps and Crypto Laws Mashup

Simon Hunt, VP at McAfee, has a great Google map mashup application that visually maps crypto laws to countries around the world, including individual US states. The map was last updated in September.

Monday, November 30, 2009

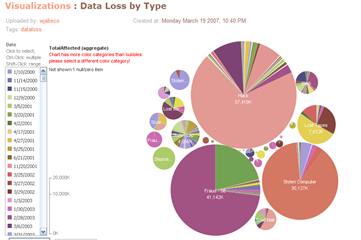

Visualisations of Data Loss

The detailed data collected by DataLossDB.org has been uploaded to two of the popular sites for visualization of data. The first is Swivel, and the graph from here shows the number of breached records as a percentage of the TJX incident where 45 million records were compromised (this is represented as 100% on the graph)

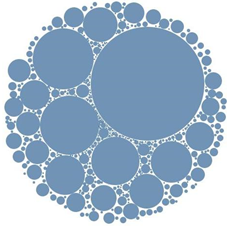

The “spikey” nature of the losses was identified by Voltage Security as the log-normal distribution. The DataLossDB data has also been uploaded to Many Eyes (several times it seems), and searching on data loss gives several graphs, like this one for data loss by type

You can use the loss data set at Many Eyes to produce other graphs of your own choice. Lastly, Voltage has a great graphic showing another representation of the relative size of data losses, which has a fractal feel to it

TLS Renegotiation Attack Whitepaper

I recently gave a simplified review of the TLS renegotiation attack. For additional technical details on the attack I recommend the 17-page whitepaper TLS and SSLv3 Vulnerabilities Explained by Thierry Zoller. He makes good use of protocol flow diagrams and considers the implications of the attack for HTTP, FTP and SMTP. He also describes fixes for the attack and a simple method to test for vulnerable servers using OpenSSL.

Sunday, November 29, 2009

How big is 2^{128}?

I came across a 2006 email thread from Jon Callas, CEO of PGP, trying to dispel the perception that a government agency might have the computing power to break 128-bit keys. He recounts a characterization of the required effort, which he attributes to Burt Kaliski of RSA Data Security (now part of EMC)

Imagine a computer that is the size of a grain of sand that can test keys against some encrypted data. Also imagine that it can test a key in the amount of time it takes light to cross it. Then consider a cluster of these computers, so many that if you covered the earth with them, they would cover the whole planet to the height of 1 meter. The cluster of computers would crack a 128-bit key on average in 1,000 years.

So that’s 1,000 years of computation by a cluster that would envelop the earth to a height of one metre.

Callas’ point was that modern cryptosystems are essentially unbreakable against brute force attacks, and speculating over the computational power of three letter agencies against 128-bit keys is verging on paranoia. Breaking passwords – that protect accounts, data or larger cryptographic keys – is a much more credible scenario to consider. Callas claims that two thirds of people use a password related to a pet or loved one, and there is no need for a planet-sized cluster to guess those.

Friday, November 27, 2009

The Other Google Desktop

Two weeks ago the Economist ran an interesting article Calling All Cars, describing how systems such as OnStar (GM) and Sync (Ford) that were conceived for roadside assistance have expanded beyond their original service offerings to include remote tracking and deactivation (car won’t start), slowing down a moving car to a halt (no high speed chases), fault diagnosis and timely servicing. Even so, 60,000 OnStar subscribers a month still use the service to unlock their cars – the auto equivalent of password resets.

I have often wondered why AV vendors have not leveraged their platforms and infrastructure significantly beyond their initial offerings in the same way. The larger vendors that service enterprise customers have a sophisticated update network for clients, that feeds into corporate networks for secondary distribution internally. Desktops are equipped with software for searching against a database of signatures, accepting or initiating dynamic updates, plus monitoring and reporting. Surely this is a basis for useful enterprise applications beyond the necessary but not so business-friendly task of malware scanning?

It is widely reported that the traditional AV signature cycle of detect-produce-distribute is being overwhelmed, and the effectiveness of AV solutions is decreasing. So AV companies should be on the lookout for new and perhaps non-traditional functionality. But even if this was not the case it would be worthwhile to consider additional services bootstrapped off the installed base.

I think one generalization would be the extension of the search capability away from signature matching towards a Google desktop model - away from search-and-destroy to search-and-deliver. Imagine if Norton or Kaspersky were presented to users as document management systems permitting tagging, search of file content, indexing, and database semantics for files – that is, provide a useful service to users beyond informing them that opening this file would not be a good idea.

In the corporate setting, desktop document search and analysis could provide many useful functions. Let’s take data classification for example. I am not sure if we can ever expect people to label documents for data sensitivity. Even if people were resolved to be diligent in the new year, there would still be a large legacy problem. Imagine now that senior management could create a list of sensitive documents and then feed them into an indexer, which distributed data classification “signatures” to desktops. The local software can scan for matching documents (exact or related), create the correct labelling and perhaps even inform the users that such documents should be dealt with carefully, perhaps even pop-up the data classification policy as a reminder.

You could also track the number of copies and location of a given sensitive document, such as drafts of quarterly financial results, which must be distributed for review but only within a select group. Again management could define which documents need to be tracked and feed them into a signature engine for propagation to desktops. If a document fell outside the defined review group, then a flag could be raised. When a sensitive document is detected as being attached to an email the user can be reminded that the contents should be encrypted, certainly if the recipients are external to the company, and perhaps prevented from even sending the document at all.

The general innovation here is to permit client management to define search and response functions for deployment within the AV infrastructure, extending beyond the malware updates from the vendor. I think there are many possible applications for managing documents (and other information) on the basis of the present AV infrastructure for distribution, matching and scanning, especially if local management could create their own signatures.

I have to admit that I am not overly familiar with DLP solution capabilities, and perhaps some of my wish list is already here today. I would be glad to hear about it.

Thursday, November 26, 2009

Death Star Threat Modeling

I was searching through the entries in my Google Notebook and came across a link to Death Star Threat Modeling, a series of three videos from 2008 on risk management by Kevin Williams. Sometimes we struggle to explain the notions of threat, vulnerability and consequence, but sit back and let Kevin take you through a tour of these concepts in terms of Star Wars and the defence of the Death Star. There are 3 videos and the link to the first is here, and the other two are easily found in the Related Videos sidebar.

How fast are Debian-flawed certificates being re-issued?

In 2008 it was discovered that the OpenSSL package in Debian had been producing low entropy public keys for about a year and a half on its Etch distribution. While it was relatively easy to patch the offending code (only a few lines), it was going to be more difficult to track down and re-issue all the weak public keys that had found their way into SSL server certificates. From my post on the topic

An article in the Register, called Debian's Epic SSL Blunder, states that the number of SSL certificates that may need replacing could be in the hundreds of thousands or even millions. So while the OpenSSL PRNG code can be easily patched, identifying and replacing all the weak keys generated by the flawed code is a big operational headache. It may be months or years before all the weak keys and their corresponding certificates are tracked down and upgraded.

At the Internet Measurement Conference (IMC) held in early November, researchers Scott Yilek, Eric Rescorla, Hovav Shacham, Brandon Enright, and Stefan Savage presented a study on the rate at which Debian-flawed SSL server certificates were being replaced. In short, the news could have been better.

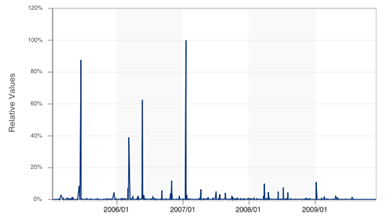

The researchers tracked a collection of approximately 50,000 public web servers over a period of 6 months. Initially around 1.5% of the servers (751 to be exact) were using Debian-flawed keys in their certificates, and the observed rate at which these certificates were being re-issued is shown in the graph below

The researchers stress that as compared to typical patching rates for general vulnerabilities, re-issuing certificates for the sample of weak servers was very slow. A long term study by Qualys reported this year that the patching half-life for vulnerabilities is 30 days, and so over a 6 month period we should see an exponential decrease in unpatched endpoints. However the graph above is approximately linear, and 30% of the Debian-flawed certificates were still not re-issued after almost 180 days. The authors conclude that

… unlike other vulnerabilities which have been studied and typically show a short, fast, fixing phase followed by levelling off, certificates were replaced on a slower cycle with substantial fixing extending well past five months after the announcement. We also found that in some cases certificate authorities continued to issue certificates to weak keys long after the vulnerability was announced.
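To make the comparison with a 30-day patching half-life concrete, here is a small Python sketch of what exponential decay would predict for the 751 weak servers. The function name is my own and the decay model is the simple idealization implied by the Qualys half-life figure, not a calculation from the IMC paper.

```python
def remaining_fraction(days, half_life_days=30):
    """Fraction still unpatched after a number of days of exponential decay."""
    return 0.5 ** (days / half_life_days)


if __name__ == "__main__":
    weak_servers = 751
    for day in (30, 60, 90, 180):
        fraction = remaining_fraction(day)
        print(day, f"{fraction:.4f}", round(weak_servers * fraction))
    # Observed instead: roughly 30% still not re-issued after almost 180 days.
    print("observed:", round(weak_servers * 0.30))
```

Six half-lives leave only 1/64 of the population, or about 12 of the 751 servers, whereas the observed 30% corresponds to about 225 servers still presenting weak keys.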

Incidentally the researchers also found that approximately 2% of the sampled servers (1000 or so) were still using 512-bit RSA keys. While such keys are not as weak as those produced by the Debian flaw, recovering the associated private keys was recently shown to require nothing more than a 3-day desktop calculation. Nonetheless, this fraction of 512-bit keys is a dramatic improvement over the results of a survey conducted in 2000 which found that almost a quarter of the 8,000 servers sampled were using 512-bit keys.

Wednesday, November 25, 2009

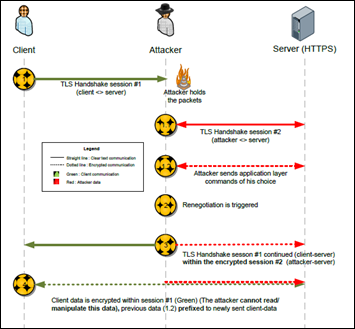

The TLS Renegotiation Attack for the Impatient

There are many posts and news articles of late on the TLS Renegotiation Attack. I had hoped that just by skimming a large number of these that some process of web osmosis would magically transfer an understanding of this vulnerability to me. But alas the topic has remained impenetrable and I had to dedicate some time to tracking down the details. By the end of this post you should have a better idea of what descriptions of the attack like this one actually mean

The vulnerability in the transport layer security protocol allows man-in-the-middle attackers to surreptitiously introduce text at the beginning of an SSL session.

The TLS Handshake

TLS has a handshake protocol that performs authentication, negotiates cryptographic parameters and generates a session key, called a bulk encryption key in TLS-speak. The cryptographic parameters include a key exchange/establishment method (usually RSA or Diffie-Hellman), the bulk encryption algorithm and a hash algorithm for MAC-ing session data. Collectively this information is called a cipher suite, and the set of acceptable cipher suites is pre-defined by TLS.

So the TLS handshake negotiates a cipher suite for the client and server, as well as a matching bulk encryption key. Any time during an active TLS session either the client or server may choose to renegotiate the cipher suite. One reason for doing this would be to refresh a bulk encryption key that had been used to encrypt a large amount of session data. Also, the client for example could decide to change from AES128 to AES256 for additional security.

The point is that in all three cases – negotiating an initial cipher suite, refreshing a session key or upgrading a key – the TLS handshake protocol is used, and therefore these operations are indistinguishable to TLS. This is the vulnerability that is exploited by the attack.

Splicing TLS Sessions

To explain the attack further, let’s assume that there is a client, server and an attacker (the usual suspects). The attacker establishes a normal TLS session with the server, which we will denote as TLS(A, S). Now let’s assume that the attacker is able to hijack sessions from the client, so that any TLS traffic from the client is routed through the attacker.

When the client attempts to create a TLS session with the server - call it TLS(C, S) - the handshake data is sent to the attacker. Now the attacker sends this client handshake data into TLS(A, S) which will be interpreted by the server as a renegotiation of TLS(A, S). Note that this is possible since the handshakes for initial negotiation and renegotiation look identical. When the server replies the attacker just relays the message to the client, and continues relaying messages between the client and server until the handshake is complete and the session TLS(C, S) is created. Traffic between the client and server will now be encrypted and unreadable by the attacker.

The point here is that an attacker can splice a client request for a TLS session with the server into an existing TLS session with the server that the attacker has established.

Plaintext Injection Attack

Even though splicing TLS sessions together is an unintentional property of the TLS protocol, it is not malicious as we have described it. It may even seem charitable of the attacker to give up his TLS session with the server for the client.

However some mischief can be undertaken. TLS is typically used to provide security for an application layer protocol, most often the HTTP traffic of a web application. Now if a partial or incomplete web command is sent to a web server then it will cache that partial command until the remainder of the command is received, whereupon the now complete command will be executed.

Recall that the attacker has created a TLS session with the server, TLS(A, S), and the attacker can send a partial HTTP command to the web server, which it caches, pending further input. When the attacker splices the client-requested TLS session TLS(C, S) into TLS(A, S) then the first HTTP commands and data sent by the client will be added to the chosen HTTP command of the attacker that is pending in the web server cache, and then executed as a valid command.

This is being called a plaintext injection attack (PIA) – even though the attacker does not have access to the encrypted TLS traffic, he can inject a portion of an HTTP command into the decrypted traffic sent by the client. It is also being called a prefix attack since the attacker is manipulating the initial part of the HTTP command.

The point here is that the attacker can create a pending HTTP command within a web application, and then have the command completed and executed using HTTP commands sent by the client session that is spliced into the attacker's TLS session with the server.
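The prefix trick can be modelled in a few lines of Python. This is a toy model only: there is no TLS or networking here, just a server object that buffers decrypted application data until a request is complete, which is the behaviour the attack relies on. The class name, URLs, header names and cookie value are all hypothetical.

```python
class ToyWebServer:
    """Buffers plaintext from one TLS connection and runs complete requests.

    The server cannot tell that a renegotiation changed who is sending the
    data, so everything arriving on the connection lands in one buffer.
    """

    def __init__(self):
        self.buffer = ""
        self.executed = []

    def receive(self, plaintext):
        self.buffer += plaintext
        # A blank line terminates an HTTP request with no body.
        while "\r\n\r\n" in self.buffer:
            request, self.buffer = self.buffer.split("\r\n\r\n", 1)
            self.executed.append(request)


if __name__ == "__main__":
    server = ToyWebServer()
    # Sent by the attacker over TLS(A, S): an unfinished request whose last
    # header is left open so that it swallows the client's request line.
    server.receive("GET /account/close HTTP/1.1\r\nX-Ignore: ")
    # Sent by the client after its handshake was spliced in as a renegotiation.
    server.receive("GET /home HTTP/1.1\r\nCookie: session=client-secret\r\n\r\n")
    print(server.executed[0])
```

The single request that gets executed starts with the attacker's request line but carries the client's cookie, so the attacker's chosen command runs with the client's credentials even though the attacker never saw them.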

What have we learned?

Let’s return to the short summary of the renegotiation attack given at the beginning of this post

The vulnerability in the transport layer security protocol allows man-in-the-middle attackers to surreptitiously introduce text at the beginning of an SSL session.

The vulnerability being referred to is the inability of TLS to distinguish between initial and subsequent runs of the handshake protocol. This allows an attacker to splice together a TLS session of their own creation with a newly requested session by an unsuspecting client. The attacker can introduce (command) text at the beginning of the client’s web session since he can arrange for a partial command to be pending in the cache of the web server.

This may all seem a bit theoretical, but the Register recently reported a practical implementation of this attack by Turkish grad student Anil Kurmus to “steal Twitter login credentials that passed through encrypted data streams”.

The simple and immediate workaround for TLS is to disable session renegotiation, with the long term solution being to explicitly distinguish between the first and subsequent runs of the TLS handshake protocol. Eric Rescorla has a detailed post on the renegotiation attack, including both long and short term solutions.

Tuesday, November 24, 2009

Navigation map of the Cloud Ecosystem

Sunday, November 22, 2009

FUDgeddaboudit

I first came across the term fuhgeddaboudit in writing while reading The Black Swan, where Taleb was answering the question as to whether journalists can be relied on to unearth all of the silent evidence on a given topic - fuhgeddaboudit! The term is short for “forget about it”, popularized in US gangster media such as the Sopranos, which Google defines as

- An issue is not worth the time, energy, mental effort, or emotional resources

- Whatever the current topic of discussion may be, regardless of who has stated it (even the speaker) is thereby declared null and void and without merit

Both of these sentiments were called forth when I read the recent post from Anton Chuvakin on FUD-based security. Anton was reminding us that FUD is alive and well in IT Security, and actually it has nowhere to go but up in terms of mindshare since more sophisticated methods, such as ROSI, have nowhere to go but down.

Even though FUD is a blunt instrument, Anton argues that it is very effective when it comes to getting things done: it allows real issues to be brought to the table, and it limits reliance on decision makers to do the right thing (which they often don’t). He even jokes that FUD is a more pragmatic triad for security than the venerated CIA.

The whole post was ethically stomped on by RThomas (Russell Thomas) from the New School of Information Security blog (NSOIS) who stated in a comment that

FUD is the distorted and irrational exaggeration of fears and uncertainties for the sole purpose of manipulating the decision-maker.

The term "FUD" originated in the 1970s regarding IBM's selling tactics against competitors. The FUD technique was used to destabilize the decision-maker's thinking process regarding potentially viable alternatives. FUD issues raised could not really be answered by the decision-maker or the competitor, and so nagged at the back of the mind. They had the effect of causing the decision-maker to retreat to the safe decision, which was IBM. "Nobody ever got fired for buying IBM" was one famous phrase embodying the effects of FUD …

There are substantial reasons for framing risks in a way that goes beyond simple statement of facts and statistics, namely to deal with the psychology of risk. The ethical security or risk professional will take pains to present scenarios that are feared in a way that the decision-maker can understand and, most important, to see those scenarios in perspective relative to other possibilities and probabilities.

and Russ further drove home his point in an additional post over at the NSOIS, concluding that

Security is always a secondary objective to some other (upside) enterprise objectives. Security investments are always subject to evaluation relative to other investment alternatives, both inside and outside of IT. These are the realities of enterprise performance and leadership. Some security people may stomp their feet in protest, or resort to unethical tactics like FUD, but don’t delude yourself that you are making the world (or the enterprise) a better place.

This is the same sentiment that I addressed in my post The Relegation of Security to NFR Status. NFR stands for non-functional requirement and includes things like ensuring that there is sufficient network capacity, that the servers are adequately sized for peak loads, that help desk support is created, that back-up and recovery is deployed, that the web interface is friendly, and so on. FUD is not really IT Security’s opportunity to get some skin back in the functional (i.e. business) requirements game, as we will still look like uninvited gate crashers at best, and bullies at worst.

At the recent CSI meeting in Washington, as reported by Dark Reading, with my take here in Security Muggles, several CSOs opined that we need better communication with business people on their terms so that Security people are earning a seat at the decision-making table. They want to do more RSVP-ing than crashing.

Wade Baker over on the Verizon blog recently asked how people make security decisions, beginning from the frank assumption that

In most cases, it is impossible to precisely formulate all factors in the decision, so we abandon the “scientific” route and revert to some other method of making it (see below). This is where our predominantly engineering mindset hurts us. Instead, we should realize that organizations have always made decisions using varying amounts of information of varying quality. Our dilemma is not new. Valid and vetted approaches exist for structured decision problems with an abundance of precise data and also for unstructured problems with sparse amounts of “fuzzy” data. These approaches are out there and are eagerly waiting for us to apply them to problems in our domain.

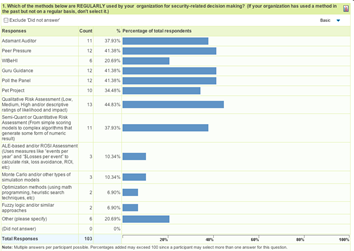

FUD can be seen as a response to this reality, but not a very structured response, and one that ignores the methods and techniques developed in other fields for coping with decisions under uncertainty. Wade also ran a little survey on the approaches that security people use for decision-making and he received just over 100 responses. You can read his summary of the response here, and his summary graph is below.

Even given the small sample size it seems that some people are opting away from FUD, far away in fact. I don’t think IT Security as a profession, or any profession (except maybe politics), has a long run future based on FUD since you don’t need much technical skill or experience to pursue this approach, and there are probably plenty of people for hire to carry out such campaigns who are not particularly well-qualified in security.

So ethical considerations aside, I have never considered FUD a long term strategy. Its persistence I imagine can be attributed largely to regular changes in the ranks of security decision makers, and a mind-numbing churn in technology and the IT sector as a whole. The same “new fears” are being presented to new people, as FUD has gone into heavy syndication in the IT Security industry and it's always showing in re-runs somewhere. Put your time and energy somewhere else.

In short, fuhgeddaboudit!

Saturday, November 21, 2009

MasterCard bets on A5/1

MasterCard recently announced (see here and here for example) that it will introduce out-of-band GSM authentication into its Chip Authentication Program. Consumers will be able to authenticate banking and other online transactions using their mobile phones, either by entering a password sent to the phone by SMS or by generating the password directly on their smart phone via a Java application.

MSN Money states that

This new development leverages the ubiquitous nature of mobile phones. According to latest research from Forrester, the number of individual mobile users in Europe will increase to 344 million users by the end of 2014, representing 84% of the Western European population. Coupled with growing online banking fraud activity (the UK Card Association reports that online banking fraud has jumped 132% in 2008 to stand at a record £52.5m.) the possibility to harness the mobile phone for authentication purposes is considerable.

This strategic decision makes the A5/1 rainbow table generation project, led by Karsten Nohl, all the more timely. The project was announced at the Hacking At Random conference in August, and described in the paper Subverting the security base of GSM. Nohl has stated that one reason for highlighting the weaknesses of A5/1 encryption is the increased usage of GSM as an additional out-of-band authentication mechanism for online protocols, and the MasterCard announcement may well prove his point. The stronger A5/3 algorithm is being phased in during upgrades to 3G networks, providing secure 128-bit encryption, and this key size is effectively beyond the reach of rainbow attacks.

However, Nohl’s project appears to have hit a snag about 3 weeks ago. On October 29th it was announced that a critical bug had been found

All versions prior to the one released on 25 of October 2009 use a buggy LFSR to generate round function values. Instead of producing 2^{64}-1 distinct values there are in fact only 32. This means that tables that ought to not produce chain merges in the event of a collision because of different round functions do produce merges.

The message is a bit cryptic but it points to a fundamental coding error in the linear feedback shift register (LFSR) components of A5/1 (it has three). It appears that the period (the number of clock cycles before the sequence produced by the LFSRs repeats) is only 32 as opposed to the much larger 2^{64}-1. This bug reminds me of the problem with Debian’s random number generator for OpenSSL, where a programming error was causing keys to be generated from at most 15 bits of entropy.
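To make the notion of an LFSR period concrete, here is a minimal Python sketch of a toy 4-bit register. It is not the project's code and says nothing about the actual bug; the function name `lfsr_period` and the tap positions are my own choices for illustration. With a good choice of taps the register visits all 15 non-zero states before repeating, while a careless choice collapses the period to a handful of states.

```python
def lfsr_period(taps, nbits, seed=1):
    """Count the steps a Fibonacci LFSR takes to return to its seed state.

    taps  -- bit positions XORed together to form the feedback bit
    nbits -- register width
    Returns None if the seed is not revisited within 2**nbits steps.
    """
    state = seed
    for steps in range(1, 2**nbits + 1):
        bit = 0
        for t in taps:
            bit ^= (state >> t) & 1
        # Shift right and feed the new bit in at the top.
        state = (state >> 1) | (bit << (nbits - 1))
        if state == seed:
            return steps
    return None

good = lfsr_period((0, 1), 4)   # taps giving the maximal period 2^4 - 1
bad = lfsr_period((0,), 4)      # feedback is just a rotation of the register
print(good, bad)
```

The same effect at 64 bits is what turns an astronomically long sequence into one with only a few distinct values.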

The A5/1 bug has been fixed and let’s hope it does not delay the project too much. Meanwhile, keep your phone handy for future MasterCard transactions.

Growth of Wal-mart across America

There is a great visualization of the spread of Wal-mart stores across America at FlowingData. The visualization starts with the first Arkansas store in 1962 and plots the sprouting of additional locations all the way up to 3176 stores in 2006. The data for the visualization can be found here, researched for the paper “Diffusion of Wal-Mart and Economies of Density”.

Thursday, November 19, 2009

Not so sunny for Whit Diffie

Renowned cryptographer Whitfield Diffie has apparently left his position as chief security officer at SUN, according to a recent article at the MIT Technology Review, who were interviewing Diffie on the security of cloud computing. The Register speculates over the reasons for Diffie’s departure from SUN after 18 years of service, suggesting that Oracle “is a company known for making its dollars count rather than indulging meta thinking”. Diffie is currently a visiting professor at Royal Holloway, University of London, which runs perhaps the most respected IT Security graduate program in Europe, while also maintaining an excellent group of researchers.

And what are Diffie’s thoughts on cloud computing? His first statement is quite telling

The effect of the growing dependence on cloud computing is similar to that of our dependence on public transportation, particularly air transportation, which forces us to trust organizations over which we have no control, limits what we can transport, and subjects us to rules and schedules that wouldn't apply if we were flying our own planes. On the other hand, it is so much more economical that we don't realistically have any alternative.

Cloud computing literally turns all our conventional security assumptions inside-out, but Diffie, like others, sees the economic sense, if not the economic certainty. A recent brief on cloud computing by the Economist could spare no more than a few sentences to discuss the security risks. The large economic wheels are turning inexorably toward adoption. Diffie goes on to say that

The whole point of cloud computing is economy: if someone else can compute it cheaper than you can, it's more cost effective for you to outsource the computation.

At the moment companies face an unsatisfying choice: either encrypt data for secure storage in the cloud, forgoing the benefits of cloud computations, or leave it in the clear for maximum computational utility but with a risk of loss or exposure. Diffie mentioned a third alternative, computing with encrypted data, but at present this alternative is not viable. I assume he is referring to the recent encryption breakthrough by Craig Gentry of IBM which could be used to perform searches on encrypted data, albeit 1 trillion times more slowly than Google does today.

In the short term (and maybe the longer term as well) Diffie sees the cloud as a matter of trust. He advises to pick your supplier like you pick your accountant.

Wednesday, November 18, 2009

mini-Bruce for $89

You can now order an action figure of Bruce Schneier for $89, already down from $100 earlier in the week.

From the supplier

"Get an action figure of Bruce Schneier a.k.a. CryptoMan! Buy Bruce's lifelike head mounted on a 12'' action figure body with pre-fitted clothes.

This package includes Bruce Schneier's custom action figure head mounted on a matching DiD or Dragon action figure body with a choice of 2 different clothing styles. You can also buy Bruce Schneier's head on its own and fit it onto your own figurines."

Bruce's take is here.

Tuesday, November 17, 2009

More on TMTO and Rainbow Tables

I recently posted about the new project to compute rainbow tables for the A5/1 encryption algorithm of GSM. As is often the case, I end up writing more than can fit in a reasonable length post (though that does not stop me from occasionally writing apparently unreasonable length posts such as here and here). Rainbow tables, and more generally Time-Memory trade-off (TMTO) tables, are fascinating subjects that I have a bit more to say about.

Babies and Giants

I think the best place to start with understanding TMTO is to return to one of its first applications, which turns out to have implications for the security of public key systems. The Baby-steps Giant-steps method was proposed by the number theorist Daniel Shanks in the early 1970s to solve the discrete logarithm problem (DLP). We recall that in the DLP we are given a generator g modulo the prime p and a value 0 < X < p, and we seek the value x such that

X = g^x mod p

Since g is a generator then { g, g^2, g^3, ..., g^(p-1) } is equal to {1, 2, 3, ..., (p-1)}. That is, every non-zero number less than p can be expressed as a power of g mod p. So we know that X has a logarithm to the base g, but how do we find it? The brute force approach is to just start searching through 1, 2, 3, ... until we reach x, which is equivalent to computing the following sequence of exponentiations (note that we omit the "mod p")

g, g^2, g^3, ... , g^{x-1}, g^x = X

That is, we start with g and keep multiplying by g until we reach X. But there is another approach to finding x based on the following sequence

g*X, g^2*X, g^3*X, ... , g^{p-2}, g^{p-1} = 1

In this second sequence we start with X and keep multiplying by g and stop when we reach 1. We know from Fermat's Little Theorem that the log of 1 must be (p-1), and since g is a generator, no positive exponent smaller than (p-1) will give 1. Now to determine x, if it took k multiplications to reach 1 from X then it follows that x = (p-1) - k.

So which approach is better? Well if we write the sequences as exponents, then the first sequence is 1, 2, 3, ..., x while the second is x, x+1, x+2, ..., (p-1). On average both sequences are about p/2 steps in length if we assume x is randomly chosen. However the second sequence contains the germ of a good idea, which is as follows. Even though we do not know the value of x we can increment it towards a value whose log we do know, and then subtract off the number of increments to determine x. Put another way, repeatedly multiplying X by g increments the target value x towards 1 which has the known log of (p-1), and x is just (p-1) less the number of increments.
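The second sequence can be sketched in a few lines of Python. This is only an illustration: the function name `dlog_forward` and the toy values p = 101, g = 2 are my own, and the code assumes g really is a generator mod p.

```python
def dlog_forward(g, X, p):
    """Find x with g^x = X (mod p) by multiplying X by g until we reach 1.

    Assumes g generates all non-zero residues mod p, so the log of 1 is p-1.
    """
    value = X % p
    k = 0                      # number of multiplications by g so far
    while value != 1:
        value = (value * g) % p
        k += 1
    return (p - 1) - k         # x = (p-1) less the number of increments

p, g = 101, 2                  # toy parameters; 2 is a generator mod 101
X = pow(g, 37, p)
print(dlog_forward(g, X, p))
```

The cost is still about p/2 multiplications on average, which is why the extra known points discussed next are needed.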

Now we can generalise this approach by creating more values with known logs. For example, we can first compute g^{(p-1)/2} and then start our search sequence as before

g*X, g^2*X, g^3*X, ...

but this time stopping if we reach 1 = g^{p-1} or g^{(p-1)/2}. If we consider the sequence 1, 2, 3, ..., (p-1), then (p-1)/2 is the midpoint and (p-1) is the endpoint, and x will reach (p-1)/2 first if it is in the first half of the sequence, and will reach (p-1) first if it is in the second half. The point is that the average distance from x to one of these two known points is now about p/4, and in fact x must reach one of the known values after at most p/2 steps.

But we can just keep going and introduce more known points. For 3 points we could use the exponents (p-1)/3, 2(p-1)/3 and (p-1), where we need to round to the nearest integer as required. Now the search sequence x, x+1, x+2, ... can be stopped whenever it encounters one of these three values, which will occur after at most p/3 steps or p/6 steps on average. In general to pre-compute k known points that can be used to partition 1, 2, 3, ..., (p-1) into k non-overlapping segments, take multiples of p/k (rounded to the nearest integer).

As it turns out, the optimal choice of k that trades off pre-computation time of the known points against the search time is k = sqrt(p). The Baby-steps Giant-steps (BSGS) name comes from first computing the exponent sequence sqrt(p), 2*sqrt(p), 3*sqrt(p), ..., which are considered the giant steps, spaced at intervals of sqrt(p). Then to find the nearest giant step we next compute the exponent sequence x, x+1, x+2, ..., x + sqrt(p), which we think of as baby steps of unit increments.
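Here is a minimal Python sketch of BSGS in the form described above, with the giant steps pre-computed and the baby steps walking forward from X. The function name `bsgs`, the dictionary used for the giant steps and the toy parameters are my own assumptions for the example, and g is again assumed to be a generator mod p.

```python
from math import isqrt

def bsgs(g, X, p):
    """Return x (mod p-1) with g^x = X (mod p), or None if no match is found."""
    m = isqrt(p - 1) + 1                 # step size, roughly sqrt(p)

    # Giant steps: known points g^(m), g^(2m), ..., g^(m*m) with their logs.
    giant = {}
    gm = pow(g, m, p)
    value = 1
    for j in range(1, m + 1):
        value = (value * gm) % p
        giant.setdefault(value, j * m)

    # Baby steps: X, X*g, X*g^2, ... until we land on a giant step.
    value = X % p
    for i in range(m):
        if value in giant:
            # g^(x+i) = g^(j*m), so x = j*m - i modulo p-1
            return (giant[value] - i) % (p - 1)
        value = (value * g) % p
    return None

p, g = 101, 2                            # toy parameters; 2 is a generator mod 101
print(bsgs(g, pow(g, 73, p), p))
```

Both loops run about sqrt(p) times, so the table costs sqrt(p) memory and the search costs sqrt(p) time.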

What principle is at work here?

The point is to improve over the brute force approach of just searching through 1, 2, 3, ... until we reach x, and by "improve" we mean reduce the number of elements to be searched before we find x. In BSGS the improvement is achieved by searching forward from X to a known value rather than starting at an arbitrary value and searching forward towards X. We can pre-compute a set of values or points in the keyspace which limits the number of values that need to be considered in the searching forward process. But the whole searching forward process exists because we are dealing with a cyclic group, and repeated multiplication by the generator g moves us forward through the keyspace. The more values that are pre-computed, the shorter the time for forward search.

Hellman's TMTO for Block Ciphers

In 1980 (around the time Kerberos was coming into being) the famous cryptographer Martin Hellman, co-inventor of public key cryptography, proposed what he called a time-memory trade-off (TMTO) for recovering keys for block ciphers. His goal was to pre-compute a set of values that would limit the fraction of the keyspace that would need searching to find a particular key. This sounds like BSGS, but we have a big problem in that practical block ciphers are not cyclic - no one encryption transformation can be used to generate all the other possible encryptions under different keys.

The Ideal TMTO

To explain TMTO let's consider a cipher for which the length of the plaintext, ciphertext and key are all the same. So this is the case for AES-128 for example, but is not true for DES or AES-256, and the TMTO attack has to be modified for these latter cases. The idea is to build a TMTO table that covers all the possible encryptions of a given plaintext P.

The attacker starts by selecting a random key R1 and then encrypts P to produce C1 = E(R1, P), which can also be interpreted as another key K1 = C1 since keys and ciphertexts are bit strings of the same length. The attacker then encrypts P with K1, to yield K2 = E(K1, P) and so on. Assume that the attacker repeats this operation N times to produce a sequence of keys

R1, K1, K2…, K(N-1), KN = S1

where R1 is called the start point and S1 is called the endpoint, and overall the sequence of encryption is called a chain. The attacker then stores the pair (R1, S1) to represent the first row of the TMTO table. Then the attacker selects another start point key R2, and produces another chain of length N represented by the pair (R2, S2). More chains are generated, using different random keys for starting points, until all or a large fraction of the key space is expected to be covered by (or represented in) the chains of the table.

Let the attacker produce M table rows in this fashion at a cost of computing M chains of length N and a storage cost of 2*M keys (the start and end point for each chain). How large should N and M be made? Well the attacker wants each possible key to appear in some chain of the table. So if the key has n bits, then selecting N = M = sqrt(2^n) = 2^{n/2} will ensure that N*M is approximately 2^n. Note that, if everything works out nicely, we get a compressed form of the keyspace, where the keys have been reordered into chains and each chain is compressed just by storing its start- and endpoints.

Computing these tables will be a large effort, proportional to the number of possible keys. But the advantage here is that once computed, the tables can be repeatedly used to break keys efficiently, which is achieved as follows. Say an attacker has a plaintext-ciphertext pair (P, C) computed under some unknown key K. What the attacker can do is then create a chain beginning with C as the startpoint, and extend the chain until an endpoint in the table is reached. The found endpoint then defines the row of the table where K lies, and the attacker can then use the startpoint for the row to re-compute the keys of the row, searching until K is found. The advantage over a brute-force search is that the attacker will need to only search over one row in the table, which will be much smaller than searching over the whole key space.
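The table construction and lookup can be sketched in Python on a toy scale. Everything concrete here is an assumption for illustration: the 16-bit key size, the function `f` (a truncated SHA-256 standing in for K -> E(K, P), since a real block cipher is not needed to show the idea), and the names `build_table` and `lookup`. The sketch keeps every startpoint that shares an endpoint, and it keeps walking after a false alarm, two details the ideal description glosses over.

```python
import hashlib
import random

def f(key):
    """Stand-in for K -> E(K, P) on 16-bit values (not a real cipher)."""
    digest = hashlib.sha256(b"fixed plaintext P" + key.to_bytes(2, "big")).digest()
    return int.from_bytes(digest[:2], "big")

def build_table(starts, chain_len):
    """Map each endpoint to the startpoints whose chains finish there."""
    table = {}
    for start in starts:
        key = start
        for _ in range(chain_len):
            key = f(key)
        table.setdefault(key, []).append(start)
    return table

def lookup(table, chain_len, C):
    """Return some key K with f(K) == C, or None if the table does not cover it."""
    value = C
    for _ in range(chain_len):
        for start in table.get(value, []):
            # Endpoint hit: regenerate the row and look for the predecessor of C.
            key = start
            for _ in range(chain_len):
                if f(key) == C:
                    return key
                key = f(key)
        value = f(value)       # no luck (or a false alarm), keep walking
    return None

rng = random.Random(2009)
N = M = 2**8                   # N = M = 2^(n/2) for n = 16
starts = rng.sample(range(2**16), M)
table = build_table(starts, N)
```

Only 2*M values are stored, yet a successful lookup costs at most a walk of N steps plus the regeneration of one row.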

The TMTO Principle at Work

Before we consider the practical issues with implementing TMTO as described above, let's step back and look at the principle of TMTO and draw out its advantages. If an attacker is given a plaintext-ciphertext pair (P,C) then to perform an exhaustive search the attacker selects some order for the keys, and then performs trial encryptions until a key K is found that encrypts P to C. So the attacker has only one point in the key space which can be used to stop the search. This is pretty much the same as trying to solve the DLP as described above by searching through 1, 2, 3, ..., x - you only have one stopping point in your search.

The principle of TMTO is to identify more keys that can be used to terminate a search or define a relatively small interval of the keyspace where K must lie. Each (key) chain of a TMTO table defines such an interval, and when the attacker starts a chain using C as the initial key, then reaching an endpoint indicates the row that contains K. In the ideal case described above, the attacker can store about 2^{n/2} endpoints which can then be used to identify a subset of 2^{n/2} keys that must contain K. Thus the search for the key K is reduced to about 2^{n/2} encryptions as opposed to 2^{n-1} encryptions on average for an exhaustive search. So the endpoints in the table correspond to the giant steps in the BSGS method, and the searching forward from a given ciphertext roughly corresponds to the baby steps.

TMTO in Practice

We have left out many details in the description above but we are mainly giving the idea behind the TMTO principle. The main problem in practice is that the chains are not very well-behaved. What we want is that each chain produces distinct values and also that distinct chains produce distinct values as well - in short, one new and distinct key for each chain encryption. However due to the random properties of encryption we cannot expect this, which reduces the coverage of the keyspace in the tables. More chains need to be generated to compensate.

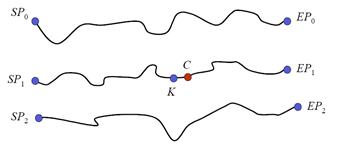

The diagram below (taken from here) shows nicely behaved chains, with startpoints (SP) and endpoints (EP) that neatly cover some distinct subset of the keyspace. The red dot shows a given ciphertext that when iterated will lead to the endpoint EP1, and then the chain can be reconstituted from SP1 and searched forward for the key K, which is the predecessor to C in the chain.

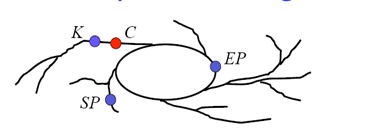

In practice, constructing TMTO tables that give a high probability of recovering keys is much more messy than described for the ideal case. The main issue is that each key from the keyspace should appear in some row of the TMTO table. However chains based on iterating (repeatedly applying) an encryption function need not produce distinct keys in a given chain, or produce distinct keys between different chains. A given chain can loop back upon itself or two chains may hit upon a common key and then merge in the table. In both cases the coverage of the keyspace in the TMTO table is reduced. It is therefore possible that the chain constructed by the attacker using C as the initial key may not reach an endpoint in the table. Even if it does, regenerating the endpoint chain may not lead to C since the chain for C may start outside the table and only merge into some row of the table. In practice chains can look more like the diagram below, snaking and merging so that K need not even be a predecessor on the chain beginning at SP, even though iterating C forward leads to EP.

Also we have assumed that each encryption in a chain defines a new key, but this is only true when keys and ciphertexts are the same bit length. When this is not the case then an additional function must be used to reduce (or expand) the ciphertext to match the stated key length.

Most of these difficulties are addressed in a collection of optimizations on TMTO tables that result in rainbow tables, originally devised by Philippe Oechslin in 2003 in the context of improving TMTO for recovering Windows NT passwords. The optimizations are subtle but the basic idea is to introduce a series of iteration functions (not just encryption) that also incorporates the position in the chain as a parameter in the iteration, which reduces the looping and merging of chains. Even so, the parameters need to be carefully chosen to ensure appropriate coverage of the keyspace.
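The position-dependent iteration can be sketched in Python as follows. This is a toy under stated assumptions rather than Oechslin's construction in full: `encrypt_p` is a truncated SHA-256 standing in for E(K, P) on 16-bit values, `reduce_at` simply adds the column number, and the function names and table sizes are mine. The point to notice is that two chains only merge if they collide in the same column, and that the lookup has to try each column in turn.

```python
import hashlib
import random

def encrypt_p(key):
    """Stand-in for E(key, P) on 16-bit values (not a real cipher)."""
    digest = hashlib.sha256(b"P" + key.to_bytes(2, "big")).digest()
    return int.from_bytes(digest[:2], "big")

def reduce_at(value, column):
    """Column-dependent step: the same value maps to different keys per column."""
    return (value + column) % 2**16

def rainbow_chain(key, length, first_column=0):
    """Apply the iteration functions for columns first_column .. length-1."""
    for column in range(first_column, length):
        key = reduce_at(encrypt_p(key), column)
    return key

def build_rainbow(starts, length):
    table = {}
    for start in starts:
        table.setdefault(rainbow_chain(start, length), []).append(start)
    return table

def rainbow_lookup(table, length, C):
    """Return some key K with encrypt_p(K) == C, or None."""
    for column in range(length - 1, -1, -1):
        # Guess that the unknown key sits in this column and finish the chain.
        end = rainbow_chain(reduce_at(C, column), length, column + 1)
        for start in table.get(end, []):
            candidate = rainbow_chain(start, column)   # key at that column
            if encrypt_p(candidate) == C:
                return candidate
    return None

LENGTH = 64
starts = random.Random(2003).sample(range(2**16), 512)
table = build_rainbow(starts, LENGTH)
```

The price of fewer merges is a more expensive lookup, roughly LENGTH^2/2 iterations instead of LENGTH for a single classical table.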

Rainbow Tables for A5/1

Given this background we can now take a more informed look at the rainbow chain parameters being proposed by Karsten Nohl in his recent project for producing rainbow tables for A5/1. The opening sentence on the page states that the tables are a mixture of 2 concepts, namely distinguished points and rainbow tables.

Distinguished Points (DP) are an optimisation, suggested by Ron Rivest, to reduce the number of endpoint lookups. In theory such a lookup must be made after each iteration, which in practice means a costly disk access outside the cosy realm of RAM. The DP optimisation is to create endpoints which have a simple distinguishing property, such as the first or last 10 bits being all zero. Thus the iteration function need only be interrupted for a disk search if the next chain value has this distinguished property.
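A small Python sketch shows the shape of the DP idea. The step function is a truncated SHA-256 stand-in on 16-bit values, and I use 4 zero bits rather than 10 so that the toy chains stay short; these choices and the names `is_distinguished` and `walk_to_dp` are assumptions for the example only.

```python
import hashlib

DP_BITS = 4        # toy value; the text above mentions 10 bits as an example

def step(value):
    """Stand-in iteration function on 16-bit values (not a real cipher)."""
    digest = hashlib.sha256(value.to_bytes(2, "big")).digest()
    return int.from_bytes(digest[:2], "big")

def is_distinguished(value):
    """A point is distinguished if its last DP_BITS bits are all zero."""
    return value & ((1 << DP_BITS) - 1) == 0

def walk_to_dp(value, max_steps=1000):
    """Iterate until a distinguished point is reached.

    Returns (point, steps), or (None, max_steps) if the walk is abandoned,
    for example because the chain has fallen into a loop with no such point.
    """
    for steps in range(1, max_steps + 1):
        value = step(value)
        if is_distinguished(value):
            return value, steps
    return None, max_steps

# Table generation: chains have variable length and end at distinguished points.
table = {}
for start in range(0, 2000, 7):
    end, steps = walk_to_dp(start)
    if end is not None:
        table[end] = start

# Lookup: walk entirely in memory, then probe the table exactly once.
end, steps = walk_to_dp(4242)
hit = end in table
```

With d distinguishing bits the chains average about 2^d steps, so the table is consulted roughly once per 2^d iterations rather than after every one.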

Each A5/1 chain has an average length of 2^{20}, and each rainbow table is expected to have 2^{28.5} such chains. To get the right coverage just over 2^8 tables need to be generated and stored for a total effort of about 2^{57}. These 2^8 tables will be generated in a distributed manner (by different nodes), and it is part of Nohl's project to find volunteers for this effort. The total storage for the rainbow tables is about 2.5 TB or just 6 GB per node for the distributed network that Nohl is proposing. To find a key from a given ciphertext requires examining about 3.5 billion chain values, which on one machine with a good graphics card will take about 2 hours, or just over a minute from the network.
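As a quick sanity check on the arithmetic, the effort is just the product of the three quoted parameters. The variable names below are mine, and I take "just over 2^8 tables" as exactly 2^8 for the calculation.

```python
from math import log2

chain_len = 2**20            # average chain length
chains_per_table = 2**28.5   # chains in each rainbow table
tables = 2**8                # "just over 2^8", taken as exactly 2^8 here

total_chains = chains_per_table * tables
total_effort = total_chains * chain_len

print(f"total chains ~ 2^{log2(total_chains):.1f}")
print(f"total effort ~ 2^{log2(total_effort):.1f}")
```

The product comes to 2^{56.5}, which with slightly more than 2^8 tables is consistent with the rounded figure of 2^{57}.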

However the project page cautions about the accuracy of these estimates and some data is presented on the likelihood and extent of chain merging. The objective of the above chain calculation is to cover just under 2^{49} elements in the keyspace, but this apparently is optimistic. Some heuristics for coping with degenerate chains are discussed but it seems that we will have to wait for the project to complete (that is, for the tables to be generated) to determine the success rate.

Final Remarks

Research into TMTO-based cryptanalysis continues, since on the one hand, there are many variations and optimizations of TMTO that can be applied, while on the other, cheaper hardware and faster processors make this attack approach more viable, particularly for ciphers like A5/1 for which brute-forcing the key length is on the boundary of a feasible computation. If you want to read more about TMTO then Karsten Nohl has a great article here on the topic, as well as this excellent survey paper from Mark Stamp.

Monday, November 16, 2009

All Posts on the Birthday Paradox

I have collected all my Birthday Paradox posts here for convenient reference

The Internet Repetition Code

In engineering, the signal-to-noise ratio, denoted S/N, compares the strength of a given signal to that of the noise that is distorting the signal. The S/N measure has been used more generally to describe the level of meaningful information as compared to spurious data. For example, the percentage of SPAM in email might be thought of as an S/N measure. About a year ago Dave Armano posted about the falling S/N rate on the internet in general, and how Twitter will be acting as a social filter to improve signal strength. We can squeeze a little more out of the S/N metaphor, à la Moore's Lore and Attention Crash.

The S/N term is from communication engineering, referring to errors induced in data during transmission - what is sent is often not what is received, if it is received at all. The traditional source of noise is mother nature, omnipotent and largely benevolent to our purposes, altering bits through lightning, static, surges and so on.

Coding theory is the study of how to add redundancy (additional bits) to data so as to detect and correct errors (noise) induced by the communication channel. One of the simplest codes is the aptly named repetition code. In such codes each bit of the message is repeatedly sent an odd number of times so that a simple majority decode rule can be followed to recover the original message. For example, the repetition code may send each bit three times and then decide to decode a given bit as a one if two or more – the majority – of the received bits for any message bit are in fact ones. So in general, if each bit is repeated 2*K + 1 times then the code can tolerate up to K errors (bit flips from one to zero, or vice versa).

Repetition codes are not very efficient as they increase the size of a given message by a factor of 3 or 5 say, depending on the repetition parameter. The redundancy added by a repetition code is just additional copies of the message itself, whereas more sophisticated codes add a smaller, more intelligent amount of redundancy that can specifically pinpoint errors and correct them. More compact and intelligent forms of redundancy come at a cost of additional message processing for both the sender and the receiver. A definite advantage of repetition codes is simplicity both in encoding, and particularly decoding – just keep a tally of the count for the received zeroes and ones for each message bit and decode the sent bit as the larger of the two counts.
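The encode and majority-decode rules fit in a few lines of Python. The function names and the sample message are my own; bits are represented as a list of 0s and 1s.

```python
def encode(bits, reps=3):
    """Send each message bit reps times (reps should be odd)."""
    return [b for b in bits for _ in range(reps)]

def decode(received, reps=3):
    """Majority vote over each block of reps received bits."""
    decoded = []
    for i in range(0, len(received), reps):
        ones = sum(received[i:i + reps])
        decoded.append(1 if 2 * ones > reps else 0)
    return decoded

message = [1, 0, 1, 1]
sent = encode(message)
sent[0] ^= 1          # flip one copy of the first bit
sent[5] ^= 1          # flip one copy of the second bit
recovered = decode(sent)
```

With three copies per bit, one flip per block is corrected; two flips in the same block would tip the vote the wrong way.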

Even so, repetition codes are not particularly popular, but most courses on coding theory use these codes to establish some basic concepts. For example, if bits in the channel flip independently with probability p then it is a nice exercise in binomial summation to compute the probability that the correct message will be received after decoding. There was an unexpected application of repetition codes in security last year when researchers in the Cold Boot Attack used these codes to analyse the best way to extract key bits from RAM.

More generally, repetition codes are suitable for application in communication environments that are subject to fading, and we can certainly think of powerless RAM as a fading channel, with bits decaying towards their ground state. Fading is produced not just by loss or attenuation of signal, but also by a signal “colliding with itself”, as it arrives at the receiver through multiple paths, in particular when the environment of the receiver has objects that act as reflectors. The signal bounces off these reflectors and arrives multiple times at the receiver, superimposed upon itself. In this case repetition codes are effective in recovering the original message from amongst the many versions of itself.
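The binomial exercise is short enough to write out in Python. With each bit repeated 2*K + 1 times, a bit decodes correctly when at most K of its copies are flipped, and a message decodes correctly when every bit does. The function names are mine, and the independence of bit flips is the assumption stated above.

```python
from math import comb

def bit_success(p, K):
    """Probability that at most K of the 2K+1 copies of a bit are flipped."""
    n = 2 * K + 1
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(K + 1))

def message_success(p, K, length):
    """Probability that all bits of a length-bit message decode correctly."""
    return bit_success(p, K) ** length

print(bit_success(0.1, 1))          # three copies per bit
print(bit_success(0.1, 2))          # five copies per bit
print(message_success(0.1, 1, 8))   # an 8-bit message with three copies per bit
```

For p = 0.1 and three copies, the per-bit figure is 0.9^3 + 3(0.1)(0.9^2) = 0.972, against 0.9 with no coding at all.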

Now let’s move up the communication stack, away from network mediums and their codings, towards the layer of applications and content, where Mr. Armano is looking for Twitter to filter content into a more favourable S/N ratio. Here signal and noise are no longer physical combatants – there is just what you like and what I like, by way of content.

For each of us the web is a noisy channel, which we express through the need to search, subscribe, aggregate, recommend, post, tweet – in short a great cull of what finds its way onto our screens. Nicholas Carr has described the web, in particular the blogosphere, as a great echo chamber, with too many posts chasing too little content. Largely then, a high-level repetition code is in operation.

The fading channel where this repetition code thrives is, of course, our attention span. Messages ricochet from one reflective source to another, with content vying to sink into the collective consciousness of the web readership. While there is plagiarism of content, by an internet repetition code we mean those relatively minor adjustments that increase the visibility of the same base content while giving downstream authors some sense of originality or contribution.

The miniskirt theory of web readership states that each message has just over 60 words to pass the “So what?” criterion of short-term attention. A repetition code seems the best strategy here - increase the retention of long-term memory by increasing the number of entries into short-term memory. And all this works well when bandwidth is plentiful and cheap, and participants are youthful, motivated and awash with time.

Twitter itself is a wonderful example of a repetition code - the whole premise of the service is to provide massive asynchronous mirroring of short content. Will Twitter cut through the Internet repetition code, and boost the signal for Mr. Armano? The answer is yes of course, but only for a while. Twitter as a pure repetition or subscription service is already on the decline, and a microcosm of clients, tools, tagging and groups has been overlaid to make it look and feel less like raw Twitter. So we are now culling already culled content.

Welcome to the repetition of Web 2.0.